In this recipe we are going to look at how to setup a postgres container from a database backup file.

When you do not know my recipes take a look at the introduction, or check out all recipes there are.

As usual in the recipe section we will look at the following:

- The problem (or the why) to solve

- What do we actually do with this

- How are we going to do it

- Caveats

- Advanced features

The accompanying git repository can be found here. It can already be used by executing the compose.sh or simply by running the commands in the How section. If you want to set it up yourself run the clean.sh first and then follow along.

On the other hand you can fully create everything you need from scratch by simply following this post.

The problem to solve

Have you ever needed to quickly restore a given backup of a database, schema, table or any other part? For example to to test the database locally or to set it up as a fail safe on another machine or for a revert of a broken deployment? Then with a containerized postgres instance and a backup file of the database this can be done super quick.

What

This recipe consists of pulling or rather creating the backup of the postgres database and storing it.

With this accomplished we setup a docker container with a postgres instance running in it and update the database with the created backup file.

How

We will now go through the following steps to create the backup of the database in a postgres container.

- Create a sample database with two tables and sample data

- Create the actual backup by using psql cli tool

- Pull postgres image from dockerhub and create a container with it

- Copy the file to the running container

- Use pg_restore to update the running instance with the backup file

- Connect to the database in the container and check if all is well

- Ease the process with an extended Dockerfile

Create the sample database

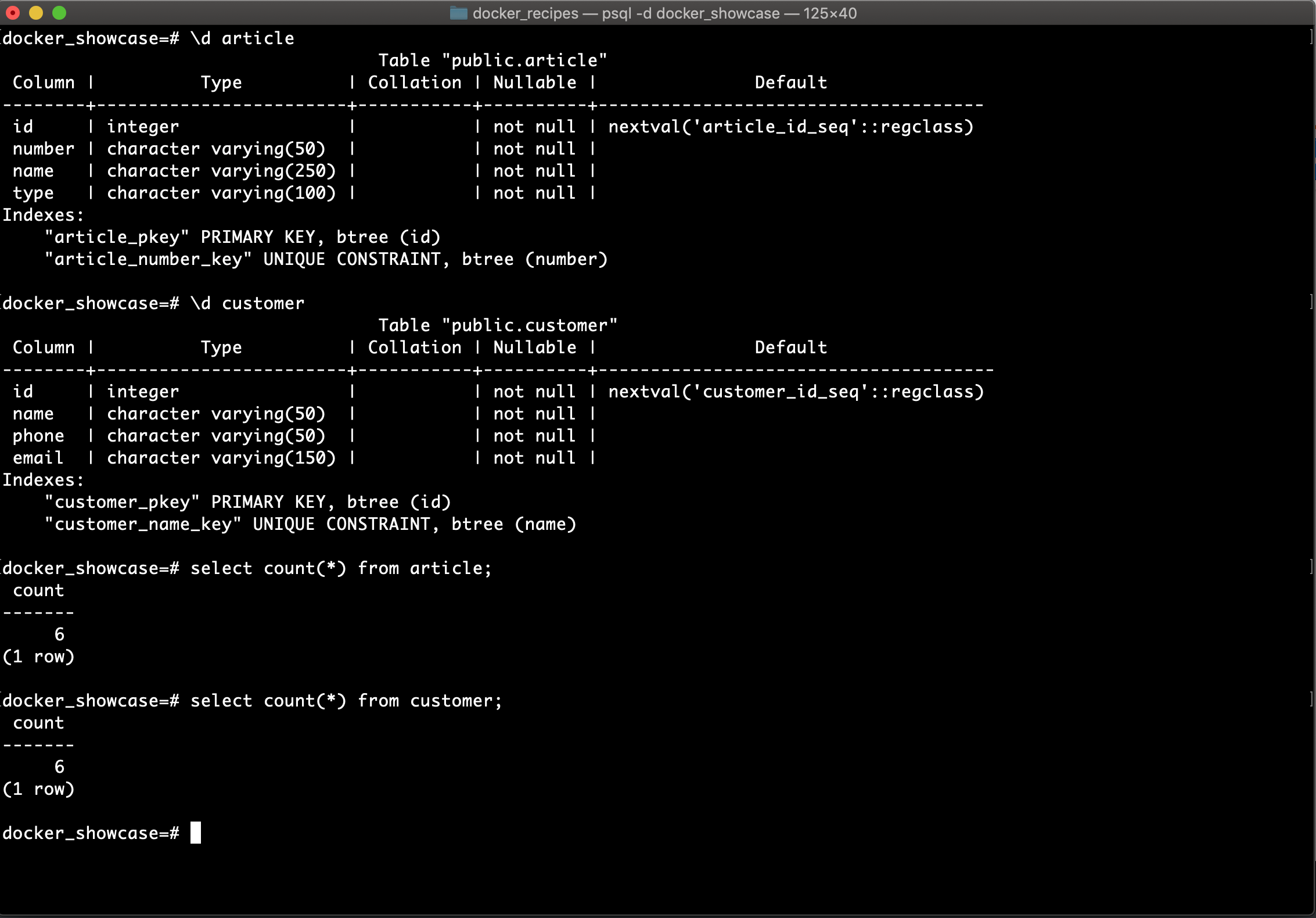

I will only show the output of the simple database. If you want to recreate it look at the github repository for this blog post. In particular take a look at the init_db.sh script file.

Create backup of the database

In the directory of the repository we either execute the backup_script.sh script or run

pg_dump -h localhost -p 5432 -d docker_showcase \

--format=c --file=backup.bak

| pg_dump | the postgres build in command for database backups |

| -h | specify the host (here localhost) |

| -U | specify the user you are connecting with |

| – -password | (I left out the password, because I set it as an env variable) |

– -format=c |

default is plaintext, but we want c for custom to be able to restore individual tables and such |

| – -file | your output file: backup.bak |

| -d | database that should be backed up: docker_showcase |

Create a container with the backup

You can either pull the postgres image from the docker hub like this:

docker pull postgres:latest

or simply start a postgres container like so, which will pull the image automatically:

docker container run -d --rm -p 5433:5432 --name db_backup \

-e POSTGRES_DB=docker_showcase \

-e POSTGRES_USER=docker_user postgres:latest

| -d | runs the container in background |

| –rm | removes container when it is stopped |

| -p | maps the exposed port from inside of the container to that one of the docker host: -host_port:container_port- |

| – -name | specify the container name for easier use in commands, here db_backup |

| -e | specify Postgres environment variables |

| postgres:10 | the image to be used for this container |

Update container database instance

With the container in place we now need to run the pg_restore command on the container. This will be done with the docker container exec command which allows us to jump into a running container and execute a given command.

Because for the command the context will now not be the docker host but the container itself we need to get the backup file inside the docker container first.

Because we already have a running container we will do this by the following commands:

docker container exec -i db_backup mkdir -p /backup/

and copy the backup file from the current directory to the container.

docker cp ./backup.bak db_backup:/backup/

Finally run the restore with the pg_restore cli tool against the container:

docker container exec -i db_backup pg_restore -d docker_showcase -U docker_user \

-h localhost /backup/backup.bak

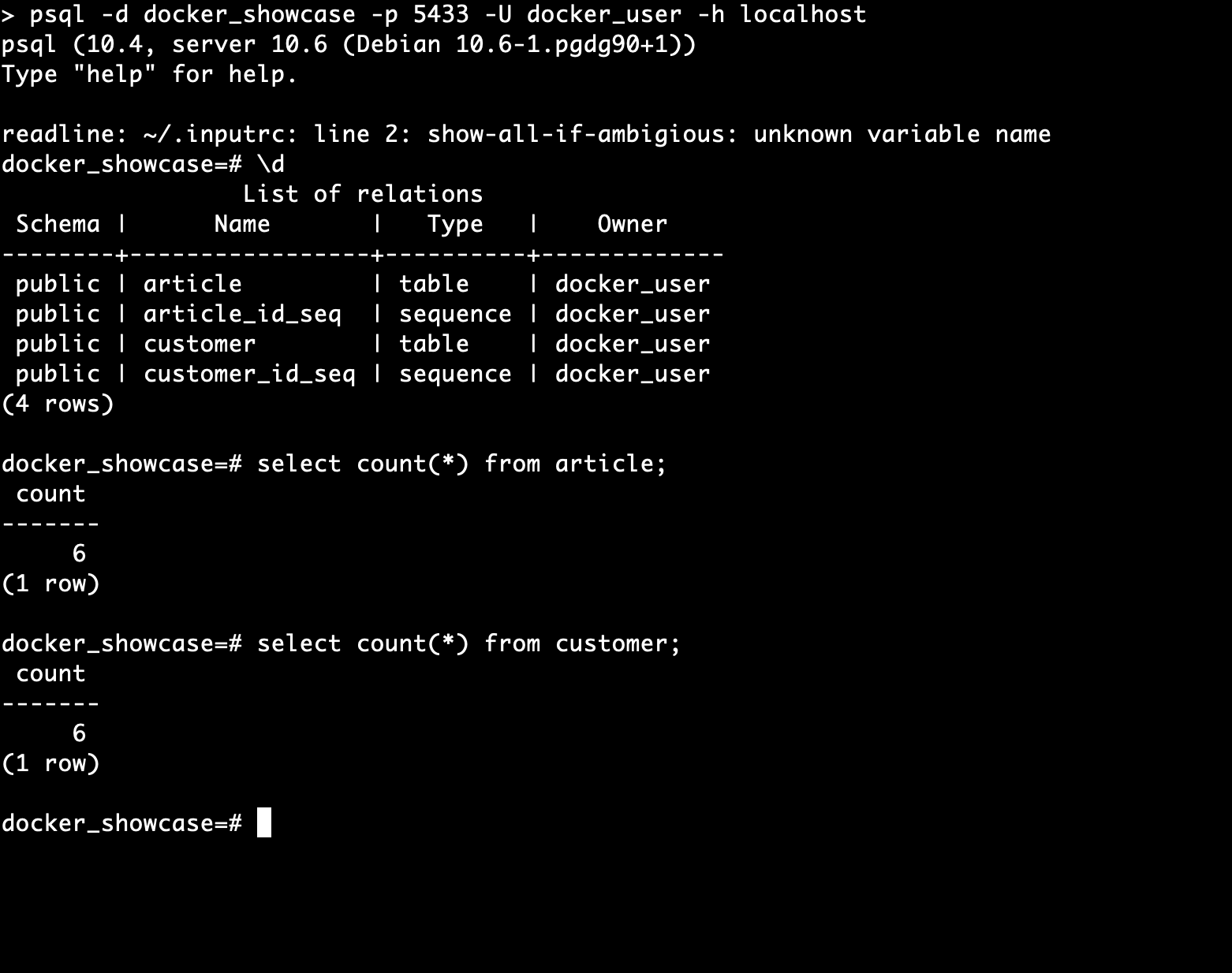

And then with connecting to the container we can see that it worked:

Dockerfile to ease the process

All those commands are cumbersome and there is already a solution that saves us at least some steps. We can utilize a Dockerfile and run docker-compose for the setup. (of course you could as well use the script from the repository)

FROM postgres:11 # ENV POSTGRES_USER docker_user # --> we use postgres in this example ENV POSTGRES_DB my_database # create directory if it does not exist RUN mkdir -p /backup/ # copy bakup COPY ./path_to_backup_dir/backup.bak /backup/

This can be invoked by building the image first:

docker image build . -t postgres_db:backup

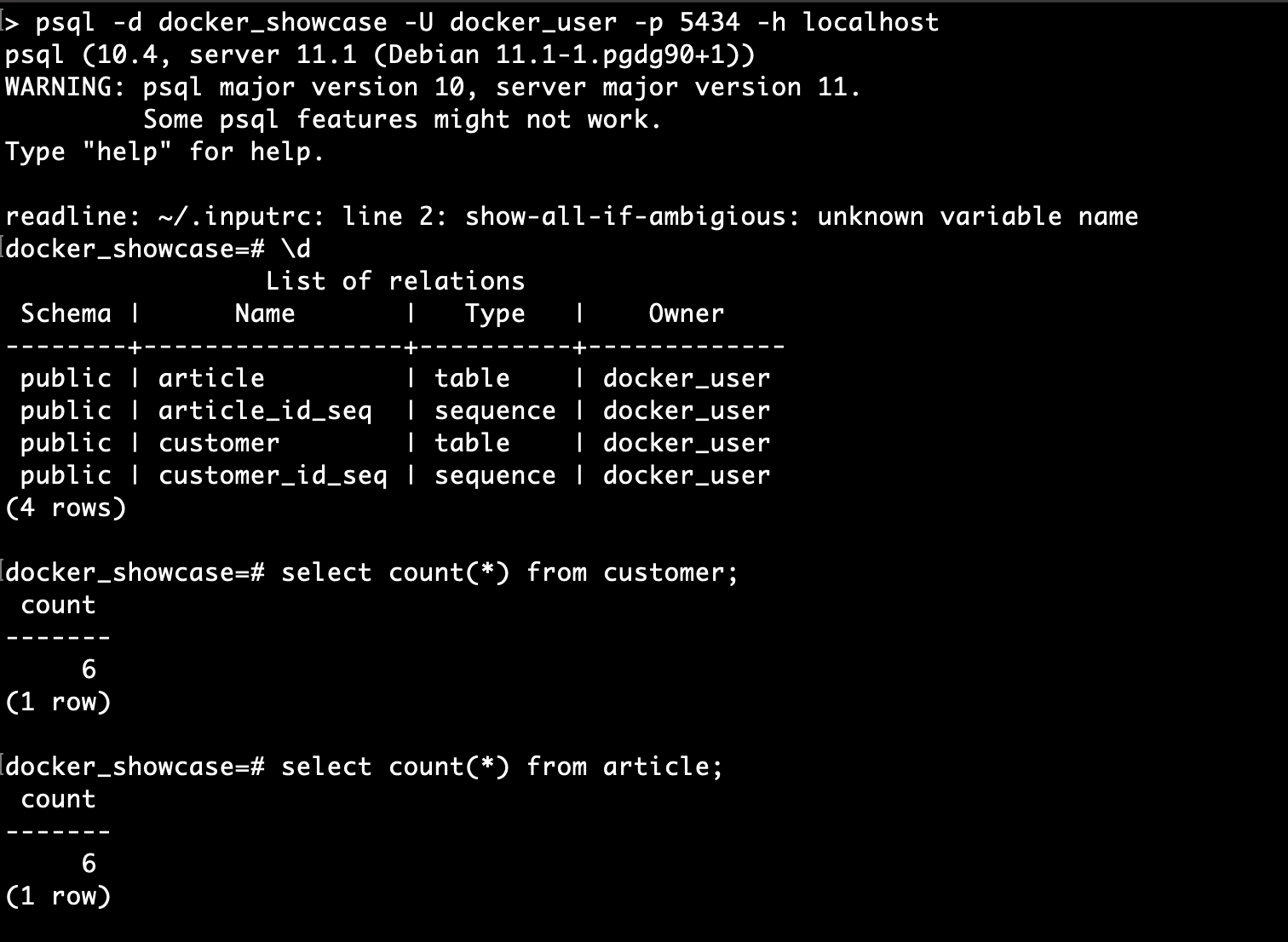

Then run this image by setting up the container again and run (notice that I use now port 5434 on the docker host, because I expect the other container to still be running. Change that at will and remove the other container with the

docker stop command)

docker run -d --rm -p 5434:5432 -e POSTGRES_USER=docker_user \

-e POSTGRES_DB=docker_showcase --name backup_postgres postgres_db:backup

and restore with the same command as before.

docker container exec -i backup_postgres pg_restore -d docker_showcase \

-U docker_user -h localhost /backup/backup.bak

At last you can again check if the restore worked.

Caveats

- docker container run needs a -p with 543X:5432 where X <> 2 if a local postgres is running

- CMD [“pg_restore” …] does not work in Dockerfile because the container is not running then

Advanced features

- Only update a single schema

- Instead of the docker cli for copy and running pg_restore, use a docker compose file instead (actually there is one in the repository)

- Update directly with Dockerfile and initdb from sql script instead of .bak

0 Comments