In this post we will explore how to connect a dotnet web api running in a docker container to a postgres database that is also running in a docker container, using docker compose. The reason for doing this is to help automate our setup, e.g. for deployment or integration testing.

This is a good idea because you can incorporate this setup into your CI pipeline, or simply make your integration tests available on all machines without a huge configuration effort (which is quite boring and often frustrating).

What this post will cover

- Setup web api in docker container with custom docker image

- Setup postgres in docker container and run init.sql script with custom docker image

- Run all of this with docker-compose

- Automate all integration test calls to the api with a bash script and curl

What this post will not cover

- Deep dive into docker compose or docker in general

- Asp net core web api basics

Also, this post shows just one way to do this; there are of course several other possible ways.

This post will include the following steps:

- Custom image for asp net core web api

- Custom image for postgres db with an init.sql script run inside

- Create sql script from ef core for automated database initialization

- Run the containers and connect them

- Run all this in a single step with a compose file

The following pitfalls made it hard for me and gave me the reason to write this post:

- Postgres in the container should be published on host port 5433 (or any port other than 5432) if you have a local postgres installation running on your machine

- You cannot use localhost to connect to another container from within a container, because localhost then refers to the container itself and not to the host machine (man, I wasted way too much time on this issue, even though it seems trivial now)

- Caution with .dockerignore. I listed the init.sql script in this file, and then the image would not build correctly because the script was not part of the build context (makes sense, but in my endless stupidity I still did this)

- As we will see later, a connection string with localhost will not work from inside a container. If you still want to use the EF core tooling from your host, you need the localhost one to work as well. My solution: use different environments (e.g. Integration for docker compose and Development for localhost)
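To make the last pitfall a bit more concrete, here is a minimal sketch of how the connection string could be switched per environment from the shell. It assumes that the app uses the default ASP.NET Core configuration (where an environment variable named Data__ConnectionString overrides the key Data:ConnectionString) and that the environments are called Development and Integration. The function name connection_string_for is made up for this example, and inDockerHost is the host alias introduced further down in this post.

```shell
#!/usr/bin/env bash

# Hypothetical helper: pick the database host for a given environment name.
# "Development" and "Integration" are assumed names, not taken from the project.
connection_string_for() {
  local environment="$1" host
  case "$environment" in
    Integration) host="inDockerHost" ;;  # alias that points to the docker host
    Development) host="localhost" ;;     # api runs directly on the host machine
    *) echo "unknown environment: $environment" >&2; return 1 ;;
  esac
  printf "Server=%s;Port=5433;Password='';User ID=integration_user;Database=integration_db\n" "$host"
}

# Export the result so that the default ASP.NET Core configuration can pick it up.
# Data__ConnectionString maps to the configuration key Data:ConnectionString.
environment="${ASPNETCORE_ENVIRONMENT:-Development}"
if Data__ConnectionString="$(connection_string_for "$environment")"; then
  export ASPNETCORE_ENVIRONMENT="$environment" Data__ConnectionString
fi
```

An alternative with the same effect would be one appsettings file per environment; the environment variable route just keeps everything visible in the script.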

Requirements to work through the examples

To follow the examples of this post you need to have the environment set up. This requires you to install the following parts on your system:

- A postgres installation on your local machine (see here for MacOs) to use the psql command line tool, so you can check whether the postgres container works. As an alternative you could connect to the docker container with docker exec -it, yet I prefer to do this from the host

- dotnet sdk and dotnet runtime (Dotnet core) for development of the API

- obviously you will need docker, which you can get here

- an editor, I like to use VS Code, but see my series on IDEs to help you find your favorite

We also need a directory structure at the root of the project that looks like the following, or similar to it (you do not need exactly the same names, but it will be easier this way; or simply download the source code from github)

What does this give us?

I ran into this scenario when I was creating automated integration tests for a build pipeline, where the web api should be set up for each test as close to the production system as possible. The tests were then run with a bash script that sent some requests via curl to the actual application and checked for the expected status codes. (I will include a sample script that can be viewed on the github page and at the end of this post, but without the status code checking. For that see my post on test your web api)

For each full test run, the containers of the application and the database were built from an image and started. When the script had run all requests, the containers were stopped and disposed. This is the blueprint for this post, because it is a quite common scenario for integration testing in a continuous integration/delivery environment IMHO.

This post will obviously have a slightly less complicated application, but the pitfalls of this approach will still be visible in plain sight. Also because this is a common scenario it can be slightly tweaked to be applied to other databases and applications.
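Since the sample script at the end of this post leaves the status code check out, here is a rough sketch of how such a check could look. The helper names expect_status and http_status are made up for this example and are not part of the github project; the url is the one used throughout this post.

```shell
#!/usr/bin/env bash

failures=0

# Compare an actual http status code against the expected one and count failures.
expect_status() {
  local expected="$1" actual="$2" label="$3"
  if [ "$actual" = "$expected" ]; then
    echo "PASS $label ($actual)"
  else
    echo "FAIL $label (expected $expected, got $actual)"
    failures=$((failures + 1))
  fi
}

# Send a request with curl and print only the status code.
# curl prints 000 if no connection could be made at all.
http_status() {
  curl -s -o /dev/null -w "%{http_code}" "$@"
}

# Usage inside a test script:
#   expect_status 200 "$(http_status http://localhost:5000/api/values)" "GET /api/values"
#   [ "$failures" -eq 0 ] || exit 1
```

Returning a non-zero exit code at the end is what lets a CI pipeline mark the run as failed.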

So without further ado, let's get into it.

Custom image for the asp dotnet web api

For the web api we create the following docker file that is called api.Dockerfile:

    FROM microsoft/dotnet:2.1-aspnetcore-runtime AS base
    EXPOSE 80

    FROM microsoft/dotnet:2.1-sdk AS build
    WORKDIR /src
    COPY ./src .
    RUN dotnet restore -nowarn:msb3202,nu1503 -p:RestoreUseSkipNonexistentTargets=false
    RUN dotnet publish --no-restore -c Release -o /app

    FROM base AS final
    WORKDIR /app
    COPY --from=build /app .
    ENTRYPOINT ["dotnet", "integration_with_docker.dll"]

First we take the dotnet runtime as the base image and expose port 80, the standard port for http calls.

Next we pull the dotnet sdk image in a separate build stage, so we can build our project inside the container. Before we can do that, we copy the directory containing the source code into the image. Then we restore all packages and publish the project in Release configuration to /app.

Finally we create the final image from the base stage, copy the published output into it, and set the entrypoint to run the .dll with dotnet.

Before we create the image and container, we first need the application code. I used the simple ValuesController from the project template and adapted it to a simple model. For this we create a Model directory with the ApiModel.cs and an AppDbContext.cs for the use of EF core. With this setup we could apply migrations on startup of the docker containers to create the database. In this code I still use an init.sql script to create the database, but it was generated with the dotnet EF core CLI. This is similar to the approach we would use in production. To view this code in detail, please refer to the github project, as it is not the focus of this post.

To create an image from this we run the following command from the root directory of the application.

docker build . -t t-heiten/api:test -f api.Dockerfile

Custom image for postgres db

There is already a postgres base image that you can find on the dockerhub.

In this post we will be using the one with the tag alpine, because it is very small and therefore keeps our own image small as well.

But our container cannot use this image as is, because we want to run an init.sql script to set up a basic database that the api can read from and write to once it connects.

So the docker file will look like this and be called postgres.Dockerfile:

    FROM library/postgres:alpine
    ENV POSTGRES_USER integration_user
    ENV POSTGRES_DB integration_db
    EXPOSE 5433
    RUN mkdir -p /docker-entrypoint-initdb.d/
    COPY init.sql /docker-entrypoint-initdb.d/

As you can tell, we use the base image from the dockerhub with the tag :alpine, as mentioned above.

We then set the environment variables POSTGRES_USER and POSTGRES_DB for the user and the name of the database.

We also expose port 5433. This matters because the standard port of a postgres installation is 5432, and if you already have an instance of postgres running on your machine, it will most likely occupy port 5432 and block the container from being published there. Note that EXPOSE only documents a port; postgres inside the container still listens on 5432, and the actual mapping to host port 5433 happens later with -p 5433:5432. There are other possible solutions to this problem, but I chose this easy approach.

Next we create the directory /docker-entrypoint-initdb.d/, which is essential for running the init.sql script during the initial database setup. Note the -p flag, which creates the directory if it does not exist and does nothing if it already exists.

The last command copies init.sql into that directory. The postgres image runs the scripts it finds there when the container starts for the first time with an empty data directory. The script can be seen in the github project, like the code for the api.
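Because the COPY step only works if init.sql is actually part of the build context, a small guard before the build can catch the .dockerignore pitfall mentioned at the beginning. This is only a sketch: the function names are made up, and the check is deliberately simple (it looks for an exact line in .dockerignore and does not evaluate wildcard patterns or negations).

```shell
#!/usr/bin/env bash

# Return 0 if the file name appears as an exact line in .dockerignore.
# Simplification: wildcard patterns and "!" negations are not evaluated.
is_dockerignored() {
  local file="$1" context_dir="${2:-.}"
  [ -f "$context_dir/.dockerignore" ] || return 1
  grep -qxF -- "$file" "$context_dir/.dockerignore"
}

# Fail early if init.sql is missing, empty, or excluded from the build context.
check_init_script() {
  local context_dir="${1:-.}"
  if [ ! -s "$context_dir/init.sql" ]; then
    echo "init.sql is missing or empty" >&2
    return 1
  fi
  if is_dockerignored "init.sql" "$context_dir"; then
    echo "init.sql is listed in .dockerignore" >&2
    return 1
  fi
}

# Usage before building the image:
#   check_init_script . && docker build . -f postgres.Dockerfile -t t-heiten/my_db:test
```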

Now test the image and the container with the following commands:

docker build . -f postgres.Dockerfile -t t-heiten/my_db:test

which results in the following output

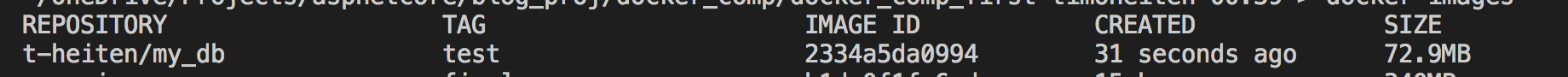

docker image list

which then results in:

So we now have the image, tagged as test with the name t-heiten/my_db.

Create init.sql from EF core migrations

Even though I will not show the contents of the init.sql, I still want to provide you with the general idea of how to create it.

- Add DbContext, Model and Repository (for details see my post on aspnetcore essentials)

- Add the needed postgres packages for EF core to the project file by running the following commands from the ./src directory:

dotnet add package Npgsql && dotnet add package Npgsql.EntityFrameworkCore.PostgreSQL && dotnet add package Microsoft.EntityFrameworkCore.Design

(make sure to have the right versions, see here)

- Add an initial migration from the DbContext and the specified Model with the following command:

dotnet ef migrations add initial_migration -o Migrations

- Create script with:

dotnet ef migrations script -o ../init.sql

Next up we will run the containers and check if everything is wired up correctly.

Run both containers in isolation

Before we get to the final automation step, we first set up the containers individually to see if it works as intended.

For this simply run the following commands and check that both containers start:

docker container run -p 5000:80 -d --rm --name t-heiten_api t-heiten/api:test

docker container run -p 5433:5432 -d --rm --name t-heiten_db t-heiten/my_db:test

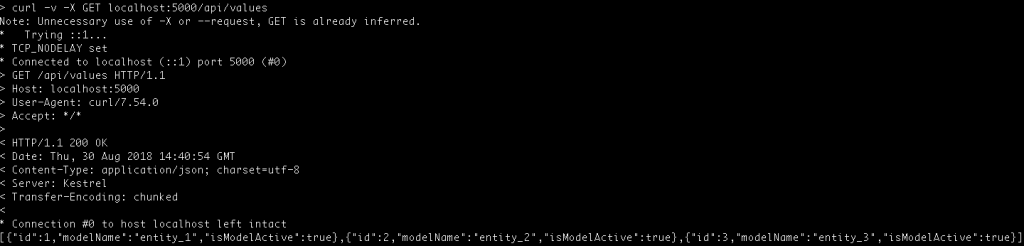

Simply check if the containers work properly with a curl request to the api:

curl -v -X GET localhost:5000/api/values

This yields a status code of 500: Internal Server Error. When we check the logs generated by the docker container of the api, we can see that the connection to the database fails. This is probably the issue I struggled with the most.

To check this you run the following command:

docker logs t-heiten_api

or if you are using VSCode, use the following Extension and right click the container named t-heiten_api and show logs from the drop down:

So the issue here is:

When the api runs in a docker container and your connection string points to localhost, localhost now means the container itself and not your local machine. Which makes sense I guess, but it was hard for me to figure out.

To work around this issue there are different methods: a custom network, linking, or adding extra hosts. I use the latter because it works well with the final automation step.

A custom network would work as well: containers on the same user-defined network reach each other by name, and ports published with -p stay reachable from the host. We are going to explore this in a dedicated post on networks with docker and asp net core. Linking behaves much like a network, but it is a legacy feature that will at some point no longer be supported. So I simply avoid using it.

We simply change the run command of the docker container and the Connectionstring in the appsettings:

"Data" : {

"ConnectionString" : "Server=inDockerHost;Port=5433;Password='';User ID=integration_user;Database=integration_db"

},

docker container run --rm -d -p 5000:80 --name my_api --add-host=inDockerHost:172.17.0.1 t-heiten/api:test

Et voilà, it works (the three entities came from Postman, so I cheated a bit ;-) )
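The address 172.17.0.1 is the usual gateway of docker's default bridge network on Linux, but it is not guaranteed to be the same everywhere. As a sketch, the address could be looked up instead of hard coded. The function names are made up for this example; the fallback to 172.17.0.1 is simply the value used in this post. On Docker Desktop the special name host.docker.internal is the usual way to reach the host, so treat this as a Linux-oriented assumption.

```shell
#!/usr/bin/env bash

# Print the gateway ip of docker's default bridge network.
# Falls back to 172.17.0.1 (the value used in this post) if docker cannot be queried.
docker_host_ip() {
  local ip=""
  if command -v docker >/dev/null 2>&1; then
    ip="$(docker network inspect bridge \
      --format '{{(index .IPAM.Config 0).Gateway}}' 2>/dev/null)"
  fi
  echo "${ip:-172.17.0.1}"
}

# Build the --add-host argument for the alias used in the connection string.
add_host_arg() {
  echo "--add-host=inDockerHost:$(docker_host_ip)"
}

# Usage:
#   docker container run --rm -d -p 5000:80 --name my_api "$(add_host_arg)" t-heiten/api:test
```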

But this is not sufficient for an automated test setup. Sure, you could write a script that sets this up, but there is a better way: Docker compose!

Docker-compose to the (automation) rescue

Docker-compose is docker's own tool for orchestrating multiple containers. You can read up on it in this blog or on the official website.

In essence you create a yaml setup file like the one below, which pretty much subsumes your docker build and run instructions.

We create two services, the postgres one and the api one. Both are built from their respective docker files in each test run. If you are not familiar with yaml, please take a look at this page.

    version: '3.5'
    services:
      postgres:
        build:
          context: .
          dockerfile: "postgres.Dockerfile"
        ports:
          - '5433:5432'
        container_name: 'db'
      api:
        build:
          context: .
          dockerfile: 'api.Dockerfile'
        links:
          - 'postgres'
        extra_hosts:
          - "inDockerHost:172.17.0.1"
        ports:
          - '5000:80'
        depends_on:
          - 'postgres'

This is then run from the root directory with the following command. To be discovered automatically, the file should by convention be named docker-compose.yml (or docker-compose.yaml).

docker-compose up

This will now build and run your containers according to those descriptions.
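One thing to keep in mind: depends_on only controls the start order of the containers, it does not wait until postgres actually accepts connections. A small retry helper can bridge that gap. The following is a sketch under assumptions: wait_for and db_ready are names I made up, the container name db comes from the compose file above, and pg_isready is the readiness tool that ships with postgres.

```shell
#!/usr/bin/env bash

# Retry a command until it succeeds or the attempts run out.
# Usage: wait_for <max_attempts> <delay_seconds> <command> [args...]
wait_for() {
  local max_attempts="$1" delay="$2" attempt=1
  shift 2
  until "$@" >/dev/null 2>&1; do
    if [ "$attempt" -ge "$max_attempts" ]; then
      return 1
    fi
    attempt=$((attempt + 1))
    sleep "$delay"
  done
}

# Readiness probe for the database container named "db" in the compose file.
# -h localhost forces a tcp connection instead of the local unix socket.
db_ready() {
  docker container exec db pg_isready -h localhost -U integration_user -d integration_db
}

# Usage after "docker-compose up --detach":
#   wait_for 30 1 db_ready || { echo "database did not become ready" >&2; exit 1; }
```

The upper bound on attempts keeps a broken setup from hanging a CI job forever.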

We test the result again with curl, which yields the same output as above (again I created the three entities with postman)

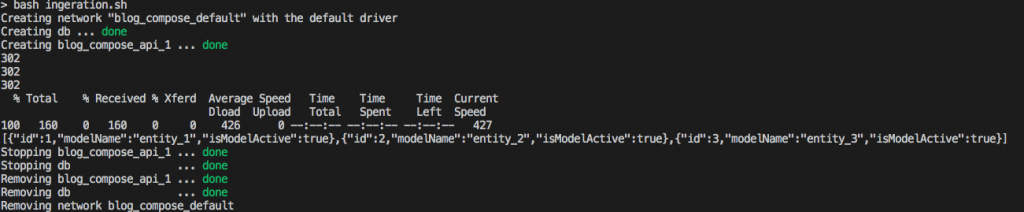

Bash Script all the things

With this file in place we can now use this command in a script for our automated integration testing, which can then be run in a CI pipeline.

See the following script with simple curl statements and setup as well as teardown code for the containers.

You could extend this in a lot of directions, like with checking status codes and returning exit codes etc. But you get the general idea.

#!/usr/bin/env bash

# docker compose up with -d flag for in background

docker-compose up --detach

# simple wait for compose to run to end

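canConnect=false

# note the spaces around "=": without them the test is always true

while [ "$canConnect" = false ]; do

# "db" is the container_name set in docker-compose.yml

result=$(docker container exec -i db psql -h localhost -U integration_user -d integration_db -lqt | cut -f 1 -d \| | grep -w "integration_db" | tr -d '[:space:]')

printf "."

if [[ "integration_db" = "$result" ]]

then

canConnect=true

else

# database not ready yet, wait a moment before the next attempt

sleep 1

fi

done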

# insert 3 entities and print status code to stdout

curl -s -o /dev/null -w "%{http_code}" -X POST -d '{"identifier" : "entity_1", "isactive" : true}' -H "Cache-Control: no-cache" -H "Content-Type: application/json" http://localhost:5000/api/values

echo ""

curl -s -o /dev/null -w "%{http_code}" -X POST -d '{"identifier" : "entity_2", "isactive" : true}' -H "Cache-Control: no-cache" -H "Content-Type: application/json" http://localhost:5000/api/values

echo ""

curl -s -o /dev/null -w "%{http_code}" -X POST -d '{"identifier" : "entity_3", "isactive" : true}' -H "Cache-Control: no-cache" -H "Content-Type: application/json" http://localhost:5000/api/values

echo ""

# get model values and print to stdout

model=$(curl -s http://localhost:5000/api/values)

echo "$model"

# kill containers:

docker-compose down

When you run this command it will output:

Summary

In this post we created a simple ASP.NET Core web api that is connected to a postgres database. This does not sound shockingly interesting. The interesting part is that we used docker containers and built-in docker tooling (at least built in with docker for mac) like docker compose to automate the setup of the application.

This was all done with one purpose in mind: integration testing as an automated step.

We looked at some issues and pitfalls along the way and how to overcome them.

I struggled a while to solve this, and I hope it helps or inspires you to overcome the same or similar issues.
